The Complete Guide to LLM Hallucinations: Types, Examples, and How to Spot Them

Large Language Models (LLMs) like ChatGPT, Claude, and Gemini are transforming the way we search, write, and create. They can summarize research, draft articles, generate code, and even answer complex questions. But they have a serious flaw: they sometimes make things up.

This flaw is called hallucination.

A hallucination occurs when an LLM generates text that is convincing and well written but false, misleading, or completely fabricated. Hallucinations are not rare mistakes; some researchers argue they are an inevitable consequence of how these models work. That makes knowing the different types, seeing examples, and learning how to spot them essential for safe and responsible use.

What Is a Hallucination in LLMs?

In everyday language, a hallucination means seeing or hearing something that isn’t there. In AI, it means producing plausible-sounding but incorrect content. An LLM might:

- Misstate a fact (“The Great Wall of China is visible from space”).

- Invent a source that doesn’t exist.

- Confidently give the wrong answer to a math problem.

These issues can happen no matter how advanced the model is or how carefully it is trained. Because LLMs generate text by predicting a likely next word rather than by checking facts, they can state errors with complete confidence.

Core Categories: Intrinsic vs. Extrinsic, Factuality vs. Faithfulness

Researchers group hallucinations along two main axes, each with a pair of categories: intrinsic versus extrinsic, and factuality versus faithfulness.

1. Intrinsic Hallucinations

- Definition: Contradict the provided context or input.

- Example: If an article says the FDA approved a vaccine in 2019, an intrinsic hallucination would claim the FDA rejected it.

2. Extrinsic Hallucinations

- Definition: Introduce details that are absent from the provided context and cannot be verified against it; often they are not supported by reality either.

- Example: Claiming that “The Parisian Tiger was hunted to extinction in 1885,” when no such animal ever existed.

3. Factuality Hallucinations

- Definition: Contain incorrect information when compared to established facts.

- Example: Saying “Charles Lindbergh was the first person on the moon.”

4. Faithfulness Hallucinations

- Definition: Stray from the user’s prompt or the provided context, even if the information is internally consistent.

- Example: Summarizing an article inaccurately by adding events that weren’t in the original text.

Specific Types of LLM Hallucinations (with Examples)

Here is a breakdown of the main types, each with an illustration.

1. Factual Errors

When the model gives incorrect or misleading information.

- Example: Claiming the James Webb Space Telescope took the first image of an exoplanet (it didn't; the first direct image of an exoplanet was taken in 2004 with the Very Large Telescope).

2. Fabricated Entities or Information

Inventing people, events, or studies.

- Example: Citing a nonexistent medical journal article about “unicorns in Atlantis” from 10,000 BC.

- Real risk: Lawyers have been caught submitting AI-generated court filings with fake case citations.

3. Adversarial Attacks

Misleading or loaded prompts lead the model to accept a false premise and elaborate on it.

- Example: If you ask, “Why did Dr. Smith commit fraud?” the model might invent a fraudulent act even though there is no evidence that any fraud took place.

4. Contextual Inconsistencies

Adding details not in the source or twisting context.

- Example: Given “The Nile originates in Central Africa,” the model replies “The Nile originates in the mountain ranges of Central Africa,” when no such ranges were mentioned.

5. Instruction Inconsistencies

Ignoring the prompt’s directions.

- Example: You ask for a Spanish translation, but the model responds in English.

6. Logical Inconsistencies

Self-contradictory reasoning or math errors.

- Example: Showing correct math steps but getting the wrong final answer.

7. Temporal Disorientation

Getting time-related facts wrong.

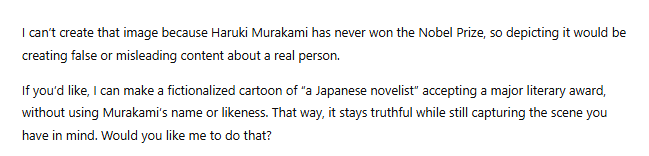

- Example: Claiming author Haruki Murakami won the Nobel Prize in 2016 (he hasn’t won at all). (I asked OpenAI to generate this image, and got this output — Nice)

8. Ethical Violations

Defamation, harmful misinformation, or legal inaccuracies.

- Example: Falsely accusing a public figure of bribery with a fabricated news article.

9. Amalgamated Hallucinations

Combining unrelated facts into one false claim.

- Example: Merging details from two different historical events into one incorrect story.

10. Nonsensical Responses

Random or irrelevant answers.

- Example: In a discussion about NBA commissioner Adam Silver, suddenly saying “Stern” without context.

11. Task-Specific Hallucinations

- Dialogue History Errors: Mixing up names from earlier in the conversation.

- Summarization Errors: Adding false causes or events in a summary.

- Question Answering Errors: Ignoring evidence in the provided material.

- Code Generation Errors: Writing code that looks correct but doesn’t run.

- Multimodal Errors: Describing objects in an image that aren’t actually there.
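For code generation errors in particular, one inexpensive check is to parse the generated code and confirm that every imported module can actually be found before running anything. The following is a minimal sketch in Python, not a complete validator: the function name `check_generated_code` and the sample snippet are hypothetical, and the check only catches syntax errors and missing top-level modules. It does not catch invented function names or wrong logic.

```python
import ast
import importlib.util


def check_generated_code(source: str) -> list[str]:
    """Return a list of problems found in LLM-generated Python source."""
    try:
        tree = ast.parse(source)
    except SyntaxError as err:
        return [f"syntax error on line {err.lineno}: {err.msg}"]

    problems = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            modules = [node.module]
        else:
            continue
        for name in modules:
            top_level = name.split(".")[0]
            # find_spec returns None when no such top-level module is installed.
            if importlib.util.find_spec(top_level) is None:
                problems.append(f"module not found: {top_level}")
    return problems


# Hypothetical model output that imports a module that does not exist.
generated = "import json\nimport totally_made_up_pkg_xyz\nprint(json.dumps({}))\n"
print(check_generated_code(generated))
```

A clean result here only means the code parses and its imports resolve; running it against tests is still the real check.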

Why Do Hallucinations Happen?

The causes fall into three broad areas:

- Data-Related

  - Training data may be biased, incomplete, or outdated.

  - Example: A model trained before 2023 won’t know about current events unless updated.

- Model-Related

  - LLMs predict the “most likely” next word, not the most truthful one.

  - Sampling randomness can introduce low-probability but incorrect words.

  - They may be overconfident, presenting wrong answers as certain.

- Prompt-Related

  - Poorly worded prompts or leading questions can trigger errors.

  - Models may “agree” with user assumptions even when false.
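The model-related causes can be illustrated with a toy next-word distribution. The sketch below assumes three candidate words with made-up scores (the `logits` values are hypothetical, not taken from any real model) and shows how raising the sampling temperature shifts probability toward the incorrect candidates.

```python
import math


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Convert raw scores to probabilities; higher temperature flattens them."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical scores for the next word after "The first person on the moon was".
logits = {"Armstrong": 5.0, "Aldrin": 3.0, "Lindbergh": 1.0}

for temperature in (0.5, 1.0, 2.0):
    probs = softmax(logits, temperature)
    wrong = 1.0 - probs["Armstrong"]
    print(f"T={temperature}: P(wrong answer) = {wrong:.3f}")
```

Even at low temperature, the top-scoring word is only the most likely one under the training data. Nothing in this calculation checks whether it is true.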

What to Watch Out For

If you use LLMs for research, content creation, coding, or decision-making, watch for these warning signs:

- Overly Confident Tone: If the model sounds certain about a claim you have not verified, check it against an independent source.

- Too-Perfect Citations: Fake references often look real at first glance, but don’t exist in databases.

- Plausible But Off: The answer “feels” right but has small factual slips.

- Context Drift: The model starts adding details that weren’t in your original materials.

- Inconsistent Details: Dates, numbers, or names change between sentences.
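Inconsistent details can sometimes be surfaced automatically by asking the same question several times and measuring how often the answers agree. The sketch below assumes the answers have already been collected as strings; the `agreement` helper, the sample answers, and the 0.8 threshold are all hypothetical choices for illustration.

```python
from collections import Counter


def agreement(answers: list[str]) -> tuple[str, float]:
    """Return the most common answer and the fraction of samples that match it."""
    normalized = [a.strip().lower() for a in answers]
    top_answer, count = Counter(normalized).most_common(1)[0]
    return top_answer, count / len(normalized)


# Hypothetical answers from asking a model the same question five times.
samples = ["1969", "1969", "1968", "1969 ", "1971"]
answer, share = agreement(samples)
print(answer, share)
if share < 0.8:  # illustrative threshold, not a calibrated value
    print("Answers disagree; verify before trusting any of them.")
```

High agreement is not proof of correctness, since a model can repeat the same wrong answer every time, but low agreement is a useful warning sign.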

Responsible Use of LLMs

Because hallucinations are inevitable, responsible use means managing the risk.

1. Always Verify

- Cross-check facts with trusted sources.

- Use retrieval-augmented generation (RAG) systems that link to the original sources.
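When the source passage is available, as it is in a RAG setup, a crude first check is to look for words in the answer that never appear in the source. The sketch below uses the Nile example from earlier in this guide; the helper names and the small stopword list are assumptions made for illustration, and simple word matching will miss paraphrases and cannot judge whether a claim is true.

```python
import re

# A deliberately tiny stopword list, chosen only for this illustration.
STOPWORDS = {"the", "a", "an", "of", "in", "on", "and", "or", "is", "are", "was", "were", "to"}


def content_words(text: str) -> set[str]:
    """Lowercased words with common function words removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def unsupported_words(source: str, answer: str) -> set[str]:
    """Words in the answer that never appear in the source passage."""
    return content_words(answer) - content_words(source)


source = "The Nile originates in Central Africa."
answer = "The Nile originates in the mountain ranges of Central Africa."
print(sorted(unsupported_words(source, answer)))  # ['mountain', 'ranges']
```

Flagged words are candidates for a human to review, not confirmed hallucinations.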

2. Ask for Sources

- Prompt the model to show citations, but then confirm they’re real.

3. Use Confidence Indicators

- Some tools display how “sure” the model is about each claim. Treat low-confidence claims with skepticism.
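One common way to derive such an indicator, where a tool exposes per-token log probabilities, is to average them and convert the result back to a probability. The sketch below assumes the log probabilities are already available as a list of floats; the values shown are invented for illustration and `sequence_confidence` is a hypothetical helper, not part of any particular API.

```python
import math


def sequence_confidence(token_logprobs: list[float]) -> float:
    """Geometric mean of token probabilities, computed from log probabilities."""
    if not token_logprobs:
        raise ValueError("need at least one token log probability")
    return math.exp(sum(token_logprobs) / len(token_logprobs))


# Hypothetical per-token log probabilities for two generated claims.
confident_claim = [-0.05, -0.10, -0.02, -0.08]
shaky_claim = [-0.05, -2.30, -1.90, -0.40]

for name, logprobs in [("confident", confident_claim), ("shaky", shaky_claim)]:
    print(f"{name}: {sequence_confidence(logprobs):.2f}")
```

A high score means the model found the wording likely, not that the claim is correct, so this kind of indicator complements verification rather than replacing it.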

4. Limit High-Risk Use

- Avoid using raw LLM outputs for safety-critical decisions (e.g., medical, legal, financial) without human review.

5. Design for Oversight

- If building with LLMs, add guardrails, fact-checking modules, and human-in-the-loop workflows.
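A human-in-the-loop workflow can start as a simple routing rule that holds back output touching a high-risk domain until a person has reviewed it. The sketch below is one possible shape for such a rule, under the assumption that keyword matching is good enough for a first pass; the keyword lists, the `Decision` class, and `route_output` are all hypothetical.

```python
from dataclasses import dataclass

# Illustrative keyword lists; a real system would need a far more careful classifier.
HIGH_RISK_TERMS = {
    "medical": {"dosage", "diagnosis", "prescription"},
    "legal": {"lawsuit", "contract", "liability"},
    "financial": {"investment", "loan", "tax"},
}


@dataclass
class Decision:
    needs_human_review: bool
    reasons: list[str]


def route_output(text: str) -> Decision:
    """Flag model output that touches a high-risk domain for human review."""
    words = set(text.lower().split())
    reasons = [domain for domain, terms in HIGH_RISK_TERMS.items() if words & terms]
    return Decision(needs_human_review=bool(reasons), reasons=reasons)


print(route_output("The recommended dosage is 200 mg twice a day."))
print(route_output("Here is a haiku about autumn leaves."))
```

Keeping the rule conservative (flagging too much rather than too little) fits the goal of limiting high-risk use without human review.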

Final Thoughts

LLM hallucinations are not just bugs—they’re a built-in limitation of current AI design. They range from small factual slips to dangerous falsehoods that can cause legal, medical, or reputational harm. The safest approach is not to expect perfection, but to detect, verify, and manage risk.

By understanding the different types of hallucinations (intrinsic, extrinsic, factuality, and faithfulness, along with their many subtypes), you can become a more skilled, cautious, and responsible user of AI tools.